Over the past decade, artificial intelligence has made great progress, and in tasks such as image and speech recognition it has to some extent surpassed humans. Trained on reward feedback, individual AI agents can also defeat humans at Atari video games and at Go.

But human intelligence also includes social and collective intelligence, which is essential to achieving general intelligence. A single ant has limited intelligence, yet through cooperation a colony can hunt, build nests, and wage war. The next challenge for artificial intelligence is to have AI agents learn human-level collaboration and competition at large scale.

Real-time strategy games like StarCraft are a natural test bed for such collaboration. Lei Feng Network (WeChat public account: Lei Feng Network) reports that researchers from Alibaba and University College London recently published a paper showing how to use StarCraft as a test scenario in which agents work together to defeat the enemy in many-versus-one and many-versus-many battles.

Cover attack

This research focuses on micromanagement tasks in StarCraft, in which each player controls their own units and tries to destroy the opponent's under different terrain conditions. Games such as StarCraft may be among the most difficult for computers because they are far more complex than Go. The main challenge in learning for such a large-scale multi-agent system is that the parameter space grows exponentially with the number of agents involved.
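The exponential growth can be made concrete with the joint action space as a rough proxy: if each of N units picks independently among k actions, there are k^N joint actions. The sketch below uses hypothetical numbers (10 actions per unit), not figures from the paper.

```python
def joint_action_count(actions_per_agent: int, num_agents: int) -> int:
    """Number of joint actions when every agent chooses independently."""
    return actions_per_agent ** num_agents


# Hypothetical setting: each unit has 10 available actions.
for num_agents in (1, 2, 5, 10):
    print(num_agents, joint_action_count(10, num_agents))
```

Even at this small scale, a single centralized controller enumerating joint actions quickly becomes impractical, which is why the agents need a more compact way to coordinate.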

The researchers formulate multi-agent combat in StarCraft as a zero-sum stochastic game. To form a scalable and effective communication protocol, they introduce a multi-agent bidirectionally-coordinated network, BiCNet, through which agents can communicate. The work also introduces dynamic grouping and parameter sharing to address the scalability problem.
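The idea of bidirectional coordination with shared parameters can be illustrated with a toy example. The following is a minimal sketch, not the authors' implementation: it assumes agents are arranged in a sequence, that a simple tanh recurrent cell passes a hidden state along the sequence in both directions, and that each agent's action is a single number in (-1, 1). All names (`make_shared_params`, `rnn_pass`, `bidirectional_actions`) and sizes are hypothetical, the weights are random rather than trained, and dynamic grouping is not modeled.

```python
import math
import random


def make_shared_params(obs_dim, hidden_dim, seed=0):
    """Random weights shared by ALL agents (assumption: untrained, for illustration)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "W_in": mat(hidden_dim, obs_dim),        # observation -> hidden
        "W_fwd": mat(hidden_dim, hidden_dim),    # hidden state passed forward
        "W_bwd": mat(hidden_dim, hidden_dim),    # hidden state passed backward
        "W_out": mat(1, 2 * hidden_dim),         # [forward; backward] -> action
    }


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def rnn_pass(observations, w_in, w_rec):
    """Run one recurrent sweep over the agents, returning one hidden state per agent."""
    hidden = [0.0] * len(w_rec)
    states = []
    for obs in observations:
        pre = [a + b for a, b in zip(matvec(w_in, obs), matvec(w_rec, hidden))]
        hidden = [math.tanh(x) for x in pre]
        states.append(hidden)
    return states


def bidirectional_actions(observations, params):
    """Each agent's action depends on the agents before AND after it in the sequence."""
    forward = rnn_pass(observations, params["W_in"], params["W_fwd"])
    backward = rnn_pass(observations[::-1], params["W_in"], params["W_bwd"])[::-1]
    return [
        math.tanh(matvec(params["W_out"], f + b)[0])
        for f, b in zip(forward, backward)
    ]


params = make_shared_params(obs_dim=2, hidden_dim=4)
three_agents = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
print(bidirectional_actions(three_agents, params))
```

Because every agent reuses the same weights, the parameter count in this sketch does not depend on how many agents take part, and the same `params` can be applied to a team of any size.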

Hit-and-run strategy

BiCNet can handle different types of combat on different terrains, including battles in which the two sides field different numbers of AI agents.

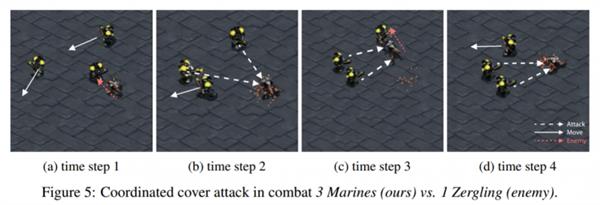

Analysis shows that, without any supervision such as human demonstrations or labeled data, BiCNet can learn a variety of coordination strategies similar to those used by experienced players. These range from basic strategies, such as moving without colliding and hit-and-run, to advanced techniques such as cover attacks and focusing fire without overkill.

In addition, BiCNet adapts easily to tasks with heterogeneous agents. In their experiments, the researchers evaluated the network across different scenarios and found that it performed well and showed potential value for large-scale practical applications.

The study also found a strong correlation between how rewards are specified and the strategies that are learned. The researchers plan to study this relationship further, and to investigate how strategies are communicated within the agent network and whether a specific language emerges. A discussion of Nash equilibrium when both sides are controlled by deep multi-agent models would also be very meaningful.
